In January 2021, WebRTC (Web Real-Time Communications) became an official web standard, a significant milestone for the open-source project Google released in 2011.

The purpose of WebRTC is to enable real-time media communications, such as voice, video and data transfer, between browsers and devices. During the COVID-19 pandemic, WebRTC became increasingly important in keeping the world connected.

(Photo provided by Justin Uberti)

To gain more insight into this amazing project, we interviewed Justin Uberti by email. Justin is one of the creators of WebRTC and now the head of streaming technology at Clubhouse. In this interview, he discusses how he started the WebRTC journey, the challenges he and his team faced, what he took away from the project and his expectations for the future of WebRTC.

This year, Justin left Google after 15 years there and joined Clubhouse. He explains why he left, and says he has fully adjusted to his new role and is enjoying his work at Clubhouse.

Beyond WebRTC and his career change, Justin also shares his love for math and computers, and what he would like to tell his younger self: to appreciate the value of compounding.

The following is our conversation with Justin Uberti.

Early days

LiveVideoStack: Hi, Justin, thank you for being here with us. Let’s start at the very beginning. When you were young, what did you want to be? Did you expect to become a software engineer, as you are now? What was your childhood like?

Justin Uberti: My parents were both professors at small local colleges, and although neither was especially tech-savvy, their encouragement played an important part in my interest in tech.

When I was 6 or so, my dad brought home a new Texas Instruments home computer. That officially set the wheels in motion for what would become a bit of a deep interest in computing, and this interest grew a couple of years later when I came across the initial game releases from Electronic Arts. The games were fun, but EA in the early days went out of their way to feature their developers as "rock stars", and, well, that seemed like exactly what I wanted to be. I still have a "career goal" worksheet from when I was 9 or 10 where I said I wanted to be a software developer.

LiveVideoStack: Did mathematics play an important part in your life when you were young? What about computers?

Justin Uberti: Despite my interest, computers were always kind of a hobby, you could say. There were clubs and camps and that sort of thing, but I was very focused on math, both in my official studies and on the competitive math circuit: Mathcounts, AIME, etc. Along the way I came to the conclusion that math was going to be my long-term career track.

LiveVideoStack: At the University of Virginia, you were a Mathematics and Physics major, but you became a software engineer after graduation. What or who inspired you to join a tech company? Did you ever think about getting a master’s degree or PhD?

Justin Uberti: In my final year at UVA (1995), I went to see the head of the Physics department about some potential opportunities after graduation. He pulled them up in his web browser, which was the first time I had ever seen the web, and I was instantly captivated. I spent a lot of time trying to understand how it worked, and started teaching myself C++ so I could actually start working with this nascent technology. It also became increasingly obvious to me that there might be a lot more opportunities in this field than in math proper.

Regardless, I was accepted to and enrolled in a math PhD program at NYU, but between my renewed interest in tech and changes in my personal life (my father passed away right after I graduated from UVA) I soon decided to leave NYU and enter the tech world.

(Photo provided by Justin Uberti)

LiveVideoStack: Thinking of your younger self, what would you like to tell yourself when you were in college?

Justin Uberti: I like to reflect a lot, so I have a number of thoughts here. That said, the main thing I would tell myself would be to appreciate the value of compounding. I often looked at eminent people in the field and wondered how I would ever be able to do anything remotely comparable. But the truth is that those folks that I looked up to just set a goal and worked toward it every day. Over the course of a few years, that sort of good-work-every-day can turn into a really outstanding accomplishment.

Also, I would tell myself to learn how to type properly. I didn't do that until much later in my career!

WebRTC

LiveVideoStack: What led you to work on WebRTC? What role did you play on the WebRTC team?

Justin Uberti: I had been interested in voice and video communications for several years, starting with the launch of AIM video chat in 2004 and then leading video technology development for Gmail video chat and Google Hangouts. I definitely recognized the complexity of this technology and how difficult it was to develop, and I was very interested in the prospect of creating an open platform that would allow more applications to make use of this technology.

At Google, I did some of the first investigations into what WebRTC browser APIs could look like, based on my prior work to develop browser-based RTC applications like Google Hangouts. I eventually became the overall engineering lead and manager for the WebRTC team, where I worked closely with my PM counterpart, Serge Lachapelle, and wore a number of hats, ranging from writing standards documents to doing developer outreach to developing sample code to authoring some of the key components in our stack.

LiveVideoStack: What’s the most challenging part of creating WebRTC? How did you deal with it?

Justin Uberti: Two parts stand out to me:

First, just the sheer scope and complexity. WebRTC itself has close to 1000 API points, implements over 100 IETF RFCs, and is over 1M lines of code. We knew this was going to be a heavy lift from the outset, but none of us realized that it was going to take almost a decade to work through all the various aspects.

Second, doing things in an open, consensus-based environment where the various players all have their own interests and motivations introduces its own complexity. I'm pleased with the results we were able to achieve, but I was somewhat inexperienced in this area at the outset and had to work hard to figure out how to navigate critical issues like, say, which video codecs WebRTC should use. Harald Alvestrand (from our team at Google) was a key resource here.

LiveVideoStack: What does WebRTC mean to you? Do you have any regrets about it?

Justin Uberti: I think it means a lot to everyone who worked on WebRTC from the beginning. I think we were able to really deliver on our vision of building a high-performance, flexible, open and secure RTC platform. And then during COVID things really took off as WebRTC played an essential role in keeping people connected. Looking forward, it's clear that online communications is the new way of working in many industries, and there are tons of new startups doing interesting stuff with RTC. So yeah, I think there's a lot for everyone involved to be proud of.

As to regrets, not much to say there. There are definitely cases where we made the wrong initial choice and then had to backtrack on it, and this made life hard for early WebRTC adopters. And some things are still too complicated. But that's pretty much every project.

LiveVideoStack: What capabilities do you expect to be included in the next version of WebRTC?

Justin Uberti: Since I left Google, I don't have the same insight into the future roadmap. But there's been considerable activity around Insertable Streams, as well as the new Capture Handle proposal, and also a new data channel implementation. I'm also looking forward to seeing Cryptex (a new security mechanism for RTP metadata) implemented.

LiveVideoStack: Some people say that QUIC is the future of WebRTC. What do you think? What challenges will WebRTC face in transporting data over QUIC?

Justin Uberti: I think QUIC has a major role to play in the future of WebRTC, primarily because it's still too hard to build WebRTC servers. There's all this great infrastructure out there for HTTP-based applications, but you have to build WebRTC app servers from scratch. Being able to send WebRTC traffic over QUIC means that it will be much easier to set up a cloud WebRTC endpoint and start sending data to it. It would also be nice to not have to keep developing a completely separate set of transport protocols (and supporting libraries) for WebRTC.

That said, the devil is in the details. How do the different congestion control algorithms in WebRTC and QUIC work together? Should we tunnel RTP in QUIC or map it to QUIC-native concepts? Is QUIC mainly for client-to-server (c2s) traffic, or should we consider it for peer-to-peer (p2p)? There's a lot to figure out here.

LiveVideoStack: An RTC engine can now be built on WebTransport, WebCodecs and WebAssembly (Zoom has done it). How do you see the competition between these new technologies and WebRTC? Will WebTransport replace the WebRTC data channel in the near future?

Justin Uberti: I think these technologies are really important for people who want to have more control over the experience they deliver. With WASM, you eventually will be able to take most of the same customized RTC stack you use for native apps and deploy that code to the browser. Or drop in your own custom codecs. But that stack will still typically be built on top of WebRTC, so I see WASM more as extending WebRTC rather than competing with it.

WebTransport is an interesting development as a much simpler stack for client-server applications that just want an unreliable transport (and don't need any of the other WebRTC machinery). I've been very supportive of this work and think Victor Vasiliev (from Google) has been doing a great job here.

LiveVideoStack: This year, Google introduced two AI codecs, Lyra and SoundStream. Do you think these AI codecs could one day be available in WebRTC?

Justin Uberti: I certainly hope so. But to the point above, it would be great to decouple the codec from the WebRTC runtime so that innovation can occur in parallel.

Overall, I'm a big believer in AI as the future of compression technology. I think the key question is when these AI codecs will replace our core codecs. Most of the codec experts I have talked to think we need one more generation of AI codecs.

From Google to Clubhouse

LiveVideoStack: In 15 years at Google, you must have met lots of amazing people. Who inspired you most, and what’s the most important thing you’ve learned from them?

Justin Uberti: I'll call out two people who influenced me significantly. One is Linus Upson, who was the original executive behind Chrome, and gave the green light to the WebRTC initiative. He helped me think about how you establish a vision for a project and encourage people to overcome seemingly impossible technical obstacles.

The second is Eric Rescorla, with whom I worked closely on WebRTC when we were the engineering leads for our respective browsers (Firefox and Chrome). Eric was very focused on robust security for WebRTC and, returning to the theme above, was willing to do whatever it took to make that happen, including submitting several significant patches to our team's (Chrome's) codebase. That sort of full-stack, just-get-it-done mindset really stuck with me.

LiveVideoStack: Of all the work you did at Google, which parts do you find most satisfying?

Justin Uberti: I've always derived enjoyment from doing technically difficult work that also delivered real value for our users. WebRTC definitely falls into this bucket, but I'd also cite things like porting Stadia gameplay to iOS via a web app, which was a risky bet but ultimately unlocked the ability for people to play Cyberpunk 2077 on an iPad.

LiveVideoStack: Why did you leave Google and join Clubhouse? Do you have a special interest in audio? Have you fully adjusted to this new job?

Justin Uberti: I joined Google when it was much smaller, and really enjoyed how unconstrained the culture was at that point in time. So I was looking for a similar sort of fast-moving environment, and also for a new application domain where there would be a lot to explore. Clubhouse and social audio checked those boxes for me, and I really liked the team as well.

I've spent a lot of time working on both audio and video, but most of our focus at Google was on video because there was so much to do in that area: HD, multi-user, screen sharing, VP8, VP9, AV1, etc. However, I feel like audio actually got somewhat overlooked during that time, and there are a number of interesting new directions to pursue in the audio space, e.g., spatial audio, which I've worked on at Clubhouse. So yes, I'd say that I've adjusted and there's a lot of interesting stuff to do!

LiveVideoStack: From what you’ve observed, what are the key differences between Clubhouse and Google?

Justin Uberti: The thing that really surprised me was that practically every internal tool I used at Google now exists on the outside as a SaaS offering, everything from search to bug tracking to hiring. And because of economies of scale, these third-party tools are often better than their Google equivalents.

Most of the other differences are the typical ones between a startup and a big company, so not much more to say there. However, it is nice to have a single product and mission which everybody is focused on, and I think that helps everyone accomplish more.

LiveVideoStack: As head of streaming technology at Clubhouse, what qualities do you look for when hiring? Educational background, real-world experience or the ability to learn quickly: which matters most?

Justin Uberti: These tend to be highly correlated in many cases, i.e., people who can learn quickly often have strong backgrounds and prior work. But quick learning is the most important quality I look for - today's up-and-coming talent is tomorrow's leadership team. We had some incredible hires within WebRTC who were relatively inexperienced at the time.

Quick learning ability.

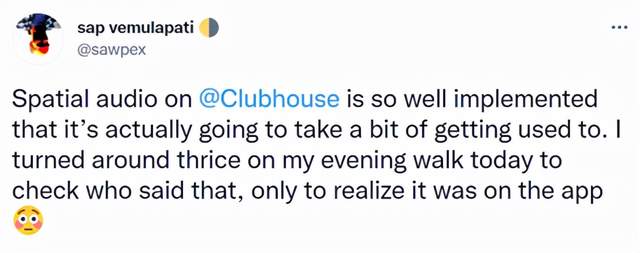

LiveVideoStack: Clubhouse recently enabled spatial audio. What have you seen in user feedback about the spatial audio experience? What can we expect from Clubhouse’s next innovations?

Justin Uberti: People have really seemed to enjoy it. Here are a couple tweets that I think capture the feedback especially well:

Without getting into too many details, there's still a lot of potential here.

Today and tomorrow

LiveVideoStack: Currently, what do you think are the biggest limitations of real time communication technologies? Are there any solutions?

Justin Uberti: We're still in the WebRTC/online meetings 1.0 phase. It works, and it works reliably, and that's an enormous accomplishment. But it's still kind of artificial in some respects, and we're not yet able to deliver things that go beyond face-to-face meetings.

One particular pain point that I think everyone can relate to is the mute button. It's such a simple concept, but it's hard to make it through the day without someone saying "you're on mute" or "could you please mute". I feel that's a significant source of friction that isn't a concern in face-to-face meetings.

I think these problems are all solvable, it will just take some time, and maybe require development of technologies that can have a deeper understanding of the media that is being shared.

LiveVideoStack: With Facebook moving toward the metaverse, what do you see as the core technologies for enabling the metaverse experience over the next 5 to 10 years?

Justin Uberti: Maybe I am getting ahead of myself here, but it seems to me that the majority of metaverse interactions will be real-time interactions - after all, the real world is a continuous stream of real-time interactions. So a lot of the things that we have been working with over the past several years seem quite relevant, e.g., WebRTC, QUIC, spatial audio, video (or point clouds), low latency, media processing and understanding, etc.

LiveVideoStack: What technologies are you interested in now?

Justin Uberti: A lot of stuff you've mentioned! At Clubhouse I've worked on spatial and multichannel audio as well as real-time speech recognition and transcription, and I've been ruminating on aligning WebRTC with QUIC for a few years now.

I'm also super interested in AI for understanding and synthesis of media, as well as novel networking and cryptographic techniques.

LiveVideoStack: Last but not least, if you are given a chance to have a conversation with a computer scientist or a mathematician, who do you want to talk to most? What would you like to talk about?

Justin Uberti: Interesting question! If he were still alive, perhaps Claude Shannon, the mathematician and engineer whose work underpins pretty much all of today's Internet. What else did he imagine that we didn't have the technology to build at the time? What sort of second-order effects might follow from today's technologies?

Editor: Alex Li